ChatGPT is a powerful tool that students must learn to control – experts weigh in on the future of AI in education

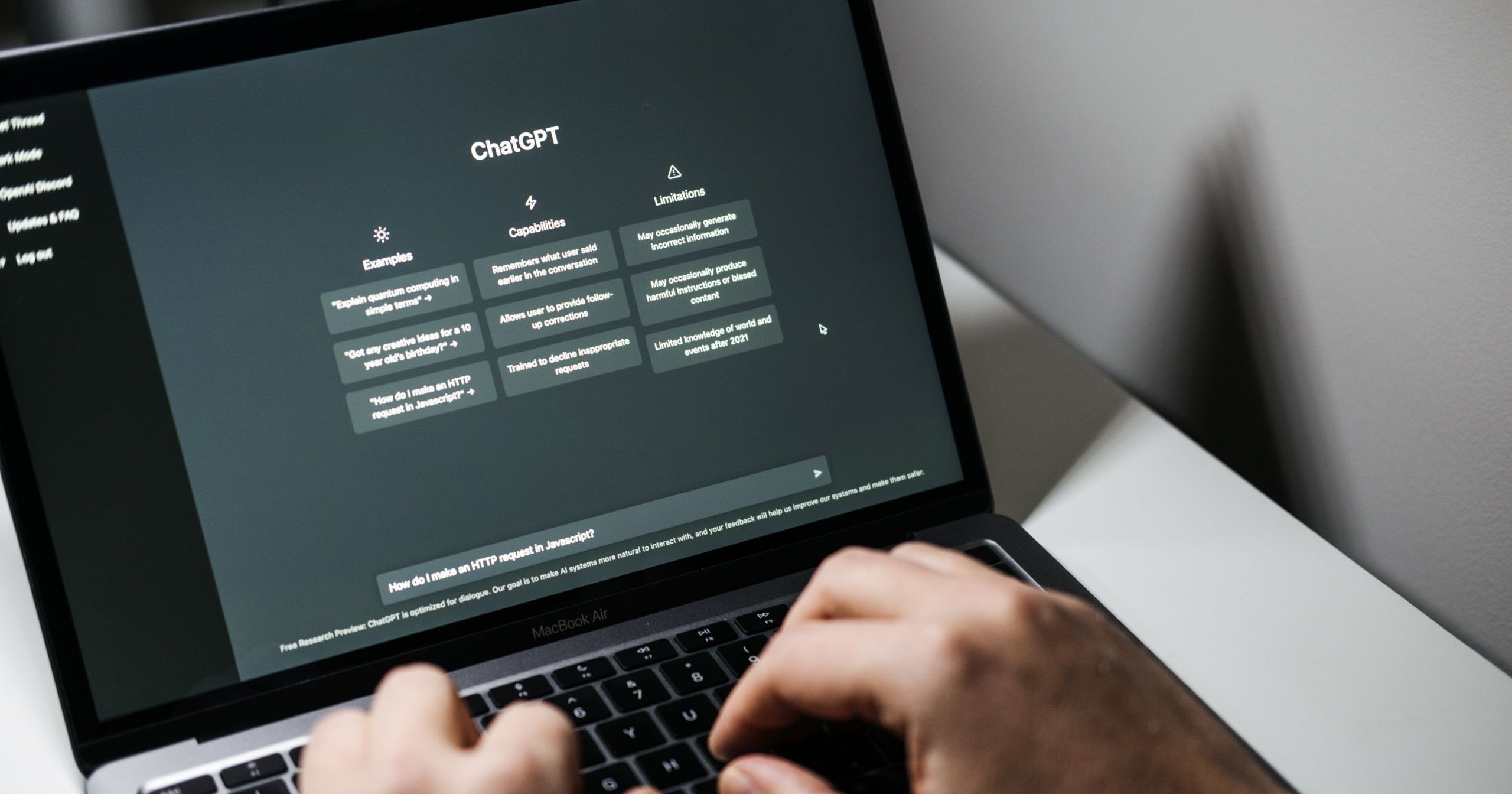

Photo: Shutterstock.

Is it a cure for writer’s block, or a tool for cheating? Will it bring the end of education as we know it? The latest developments in AI text generators, most famously ChatGPT, are forcing us to rethink writing, teaching and learning.

Since the tech company OpenAI released its (for now) free chatbot ChatGPT in November 2022, it has become clear that the tool has vast implications for society in general, and education in particular.

ChatGPT can write a haiku, a love letter, a cover letter and software code. It can correct errors in your software code. It can pass the Danish national citizenship test, pass an MBA exam and provide instructions, delivered in biblical verse, on how to remove a sandwich from a VCR.

In education circles, the test-taking abilities of the chatbot have received a lot of attention. At CBS, too, IT support began receiving questions about its permissibility in exams as the hype grew at the end of last year.

So far, the stance of CBS, as well as other Danish universities, has been that the use of ChatGPT and similar tools is not allowed for exams. The reasoning is that it does not meet the requirement of solving the exam questions individually.

Internationally, some institutions have gone even further. New York City’s education department, for example, has blocked access to ChatGPT on all school devices and networks.

AI on the curriculum

Henrik Køhler Simonsen, external lecturer at CBS Department of Management, Society and Communication, wants to shift the conversation away from cheating and bans.

This is partly because students are already using the tools and there is no way to tell whether a text is human- or AI-generated. It is also because students, as modern workers, will need to know how to use AI. He therefore argues that AI should be part of the curriculum.

Henrik Køhler Simonsen is an external lecturer at CBS Department of Management, Society and Communication and a visiting professor at Saint Paul Business School in Brazil. He has studied AI in education over the last four years. Photo: Private.

“If you ask me, we should not prohibit using ChatGPT. It was the same when calculators came into use 45 years ago. There was a lot of opposition because people argued that students wouldn’t learn to add and subtract. This is more or less the same. We should not ban it but embrace it.”

Generative AI, i.e., artificial intelligence that can generate novel content, such as text, has already been around for a couple of years. When a customer service chat churns out sometimes useful, often useless generic responses to your questions, there is likely an AI text generator behind it.

But last November, when the American company OpenAI made its chatbot ChatGPT available for free, the buzz around generative AI escalated.

An explosion gone unnoticed

Henrik Køhler Simonsen has been researching AI in education since 2018. At CBS, he teaches courses on branding and communications management, among others. In his classes, he presents the tools to his students, though they often already know more than he does.

“They already use these tools. That is not new. We need to change assessments and curriculums. It doesn’t make sense to ask them to write a synopsis based on facts. They can ask an AI to do that in two seconds.”

Do you think students also use it to cheat?

“Open your eyes. All young people I know already use ChatGPT, RYTR [another AI writing tool] or other AI text generators. My colleagues, children, students, everybody, even high school pupils are using it.

“We’ve forgotten that a text generator can, in fact, help students overcome the blank screen syndrome. If you want to do a piece and you know what you want, but you can’t get started, you can do that by using an AI text generator.”

He calls the arrival of AI text generators a “Tunguska event”. The Tunguska explosion (caused by an asteroid) occurred in 1908 in Siberia, Russia. It is the largest impact event on Earth in recorded history. Though it destroyed vast areas of land, many years passed before the true extent of its impact was realised.

That is how Henrik Køhler Simonsen feels about generative AI.

“Not to be arrogant, but I already argued in 2019 that this would revolutionise how we teach and learn. The Tunguska analogy is that we have been hit, but it seems as if the education sector, at least in Denmark, is still reluctant to change, even though we’ve been affected by such a huge event.”

Why do you think that is?

“I think it’s difficult to change a system that’s so heavily regulated. Perhaps it’s also difficult for teachers to change and know how students can work collaboratively with an AI. We want to teach our students digital skills and we want to prepare them to be active, responsible and digital citizens. So, if we want that, we also need to teach them how to use an AI,” he says.

Some of his research projects have involved studying how humans feel about the potential of AI. According to Henrik Køhler Simonsen, the study subjects were “thrilled by the prospect of seeing AI and impressed by the output”. Today, those outputs are even better than when the study was done.

Tunguska destroyed vast areas of land and forest. Is that also part of the analogy? What will AI destroy?

“If you ask ChatGPT a question, you will have a, more or less, ready-made synopsis that, of course, short-circuits a number of academic processes we usually like students to go through. I’m not saying we should remove that from the way we teach. But obviously, some things will be destroyed. Just like the internet has changed the traditional way of talking about fact-based knowledge. It doesn’t make sense anymore.”

A power that needs to be tamed

Even though he is thrilled about the potential of AI text generators and AI in education, Henrik Køhler Simonsen is not certain that it is only good news.

“It remains to be seen. One school of thought is very much against using AI; another school is very much in favour. To be frank, there’s no consensus in either research or education that this is totally a good idea because it has some serious drawbacks.”

Elon Musk, one of the early funders of OpenAI, the company behind ChatGPT, pulled out of the company, in part due to fear of its malicious potential.

Should we be worried?

“Yes, I’m worried and we should be. It definitely calls for more regulation in some areas. It can be misused and used by evil forces. I acknowledge that.”

However, exactly because it is a powerful tool, he thinks we must also control it.

“If we want students to become digital natives and give them the skills they need when they leave CBS, we should teach them how to sort bad information from good and be able to critically reflect on the use of AI. We should teach them how to edit the information they insert into ChatGPT. Pardon my French, but it’s shit in, shit out, if you feed it with shit, you get shit.”

Henrik Køhler Simonsen has coined the terms “pre-edit”, “mid-edit”, and “post-edit” to describe where and how humans should interact with AI text generators to make them useful. These are the areas he thinks students should learn.

“We need to prime the AI with high-quality content before we start generating. That is pre-editing. Then, help steer it in the right direction while it generates, that’s mid-editing. And then post-edit the output.

“So, we should teach students how to work with AI and how to edit the output and insert what I refer to as world knowledge, relational knowledge, social knowledge, emotional knowledge, market knowledge, customer knowledge – all the types of knowledge it cannot yet generate.

“If we start using AI without teaching how to be critical of the results it produces, that would be a real danger. I’m also afraid: if we teach students to use this, when will they learn to write a coherent argumentative text? That’s the most dangerous aspect. And in fact, using AI requires more knowledge from the students.”

Are we all on the way to becoming editors who let robots do the writing?

“Yes. That’s the short answer. Our tools, our jobs will be changed significantly when it comes to producing text. Over the past five years, we’ve discussed image recognition as the next big thing in AI, but we already know that’s not the case. The next big thing is text production. We are already consuming a lot of AI-generated text. I honestly believe we will see examples where people primarily use AI to generate text and then edit and improve it afterwards. Numerous companies already use ChatGPT or other AI text generators in marketing and social media.”

However, there is one area that he would like to leave to the humans.

“I think it would be unethical to let AI assess students. That is unethical – and reminds me of Blade Runner.”

One tool among many

Another CBS researcher who has been following the development of generative AI is Anoush Margaryan, professor of learning sciences at the Department of Digitalization at CBS. She reiterates many of Henrik Køhler Simonsen’s points, though with a bit less fervour.

“I’ll keep an eye on it, but I don’t think I will be using it regularly. I don’t expect it will disrupt my scholarly practices,” she says.

She describes ChatGPT as “one of a myriad of new and interesting developments with implications for teaching, learning and research”. She is a bit surprised by how much attention ChatGPT has generated – considering there are many other similar tools on the market.

“We should focus on cognitive principles and not chase after each tool,” she says.

As a researcher studying how people learn in digital settings and in the context of new digital work practices, her concern is with the users’ ability to understand the tool and benefit from it. Anoush Margaryan wants to see more research on AI and learning to support its use in teaching.

Anoush Margaryan, professor of learning sciences at the Department of Digitalization, CBS. Photo: Private

“It’s a positive development, but with every new technology, an important question arises: do the learners and lecturers have the skills to use these technologies in a pedagogically beneficial way?”

Do you think students should be using it?

“I wouldn’t say should but could. Students can learn about these technologies.”

Conversations with a chatbot

One example she mentions is that language learners can have conversations with the chatbot. If they want, they can also ask it to correct their grammar mistakes.

Should they wish to have a philosophical conversation with the tool, it can also do that. Anoush Margaryan has tried.

“This chatbot and generative AI tools are often trained to use the pronoun ‘I’. But if you think about it philosophically, is it appropriate for a machine to say I?” she asks with a laugh.

“Of course, the designers of these machines make them sound like humans so that humans interact better with them, but that also blurs the line.”

When playing with ChatGPT herself, Anoush Margaryan asked the chatbot directly: “Should chatbots be allowed to use the pronoun ‘I’?”

The answer: “Chatbots are not sentient beings and do not possess consciousness or self-awareness, so it is not necessary to assign them pronouns such as ‘I’. However, it is common for chatbots to use first-person pronouns such as ‘I’ or ‘we’ in order to make their responses sound more natural and human-like. It is a design choice and ultimately up to the creators of the chatbot.”

This exemplifies one of the risks with generative AI – that it blurs the line between what is machine created and what is human created.

“One important implication is how people can ascertain what’s real and what’s not. I think one implication for skills development is how universities can teach students to function productively as citizens in work settings and societal settings where such tools and media become more widespread.

“We can’t always tell, other than being sceptical about information we hear and trying to build our own understanding of things. But we live in a reality where large numbers of people might not have the time or ability to do so.”

Anoush Margaryan agrees that banning people from using AI is not productive.

“I don’t think it’s a good idea to prohibit. We need to think about how we can construct authentic assessments that are based on real-world projects and application of knowledge. The way forward is to develop our cognitive abilities to be able to function with these technologies in the future.”
